You might think this is nothing new to you, but lift and shift is one of the biggest issues you can see in mid-sized to large companies worldwide. This week I was a speaker at the Cloud Native Conference by Vogel IT-Akademie, and after my talk this was one of the topics discussed in a small group. Technically the idea is clear, and so is the path, but it is still a huge issue. Lift and shift is very easy to achieve, especially because there is a variety of tools on the market that support it. It is not necessarily wrong, either, since it always depends on the use case.

That there really is an issue is clearly visible: according to Gartner, companies overpay by around 70% if they are not able to conduct a proper migration, meaning one that goes beyond lift and shift. In addition, according to Flexera's 2023 State of the Cloud report, nearly 60% of enterprises see a need to optimise their cloud costs, which points to clear problems in how they migrated to the cloud.

"While promoting 'lift and shift' as a state-of-the-art approach for modernizing infrastructure towards the cloud, we inadvertently perpetuate the legacy burdens that hinder cloud adoption. This practice results in inefficient cloud journeys and financial loss. To truly harness the benefits of cloud computing, it is imperative to move beyond mere replication of on-premises environments and instead focus on comprehensive modernization strategies that align with cloud-native principles."
Sascha Lewandowski – Cloud Architect

There are also prominent examples of companies that ran into problems on their journey to the cloud. The most prominent example of going 0 -> 100 and 100 -> 0 with the cloud is Dropbox. They tried to get into the cloud quickly with a lift and shift approach, which led them straight into the cost trap.
Dropbox eventually decided to move back into its own data centers, learn from the issue, and prepare a proper migration to the cloud. Another example is GE (General Electric), which faced a huge financial impact after initially starting with a lift and shift approach.

Lift and shift always comes with a group of drawbacks, which can technically be grouped into these five categories.

The key takeaway is that it is never too late to think about a well-considered, controlled migration. Such a migration might still contain some parts of lift and shift, but mostly using the benefits of a cloud-native approach is not only financially beneficial, it also saves time: by using the shared responsibility model that cloud providers introduce, it is possible to cut maintenance costs. To really get the most out of it, it is essential to follow a few steps while preparing your migration.

In conclusion, the journey to the cloud requires careful planning and execution. While lift and shift can be part of the strategy, it's crucial to focus on a cloud-native approach to truly harness the benefits of the cloud. Learning from the experiences of companies like Dropbox and General Electric, we see the importance of optimizing cloud usage to avoid financial pitfalls and maximize efficiency. By following a well-structured migration plan and leveraging the shared responsibility model offered by cloud providers, organizations can reduce maintenance costs and achieve long-term success in their cloud endeavors. Remember, it's never too late to re-evaluate your migration strategy and ensure it aligns with your business goals and operational needs. A thoughtful, controlled migration will not only save costs but also position your company to fully exploit the advantages of the cloud.

Image by Gerd Altmann from Pixabay

Why I also work on weekends!

Some of my friends and colleagues very often ask me why I also work on weekends or even on national holidays. I could use this free time better, or at least very differently from what I'm doing. Sometimes they also tell me: "Hey, you are nearly always online, aren't you?" Yes, I am, and yes, I do! So what? Mind your own business! But this answer is really aggressive and far from kind to others. There are several reasons why I do it, and there is one common argument I would like to remove from the discussion right away, because it is definitely wrong. Let's start with the point I think is deeply wrong before we move on to why I work on weekends.

🙁 Negative

If you talk with someone about working on weekends, they imagine a person who can't get their work done within working hours. In other words, this person isn't able to organize themselves, and a time planner is what we would recommend. Let's take a deeper look, because time management is one side and the influences around you are the other. In the end, even a perfect time plan only works if there is enough space between appointments and tasks, while interruptions by others also carry weight.

🙂 Positive

If we now take a deeper look at the opposite, we can see there are a lot of reasons why someone could and would want to work on weekends. For me personally, the most important reason is my profession. As a Linux administrator and cloud engineer, the best time for me to work is when other people are asleep, which basically means at night or on weekends. Another point is that working without interruptions goes deeper and is way more efficient. I can see in my own work that concentrated work in the evening or overnight lets me get more done than a whole day does. Cooperative work overnight in particular pushes things toward a result. As a third point, I work in a profession with a mission-critical environment.
This means that if I did the work during the day, it would affect other departments and possibly the whole way the company earns money. That impact is not acceptable and wouldn't work anywhere in the world.

What will be the result now?

As you can see, night and weekend work is truly necessary, and I can tell you it's not only connected with me as a cloud engineer; it happens all over the world in different professions. Just look around the next time you are in the center of a larger city at midnight. Look at who is still working, and you will see that IT engineers are far from alone when working at night. But we should never forget that the foundation for working on weekends is will. That is connected with benefits like money, of course, but even more with passion for the field you work in. In my case, there is nothing more relaxing than working with technology or experimenting with it. As a result, I can say that I work on "off-days" because I have fun while working and my passion and profession are connected. This also results in fast and efficient work.

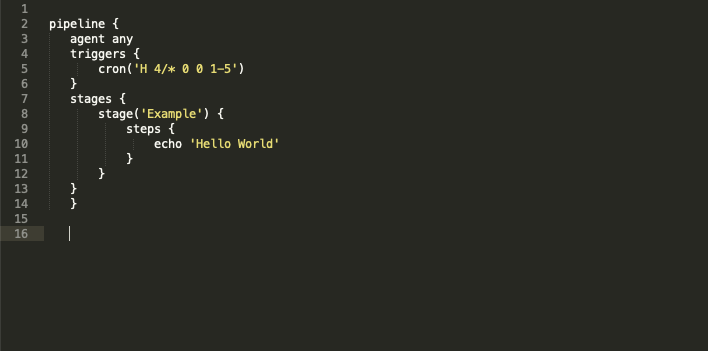

Using properties in Jenkins scripted pipeline

The open source CI/CD software Jenkins has a really nice integration of pipelines. If you are using version 1.x, you have to install a pipeline plugin, but it is highly recommended to use the newer version 2.x. In this version, you can also install the new UI called "Blue Ocean". It's a new way to display pipelines and the actions inside them, but let's get back to the topic.

Jenkins scripted pipeline as engine for automation

If you are using Jenkins pipelines (version 2.5+) in a multibranch project, where the best way to define the pipeline is a Jenkinsfile inside a Git repository, you can't edit the settings of the job. You can only look at them; you can't save your changes. However, you can add some lines to the pipeline code itself to take control of these properties and settings. There is a special part of the syntax just for job properties, but you have to look closely at which type of pipeline you are using.

Jenkins provides two types of pipelines. The first one is the declarative pipeline, which Jenkins.io describes as follows: "Declarative Pipeline is a relatively recent addition to Jenkins Pipeline which presents a more simplified and opinionated syntax on top of the Pipeline sub-systems". You can describe the agent, options, parameters or triggers. Everything is in the top part of the syntax, so you can be sure it's loaded when your pipeline starts. The other one is the scripted pipeline, which Jenkins.io describes like this: "Scripted Pipeline, like Declarative Pipeline, is built on top of the underlying Pipeline sub-system. Unlike Declarative, Scripted Pipeline is effectively a general purpose DSL built with Groovy. Most functionality provided by the Groovy language is made available to users of Scripted Pipeline, which means it can be a very expressive and flexible tool with which one can author continuous delivery pipelines". By the way, DSL here means "Domain Specific Language", which is described very well at wikipedia.org.
Practical use of properties

For configuration reasons, we use the scripted pipeline type in our projects. First of all, its documentation on the official site is only about 30 lines long. If we take a look at the declarative pipeline documentation, it's 10 times bigger :-D. Still, we needed working syntax for properties in a scripted pipeline, because we wanted to use cron jobs triggered by Jenkins. The declarative pipeline documentation describes it this way:

```groovy
// Jenkinsfile (Declarative Pipeline)
pipeline {
    agent any
    triggers {
        // every 4 hours (at a hashed minute), Monday to Friday
        cron('H */4 * * 1-5')
    }
    stages {
        stage('Example') {
            steps {
                echo 'Hello World'
            }
        }
    }
}
```

But this won't work inside a scripted pipeline; believe me, I've tested it :-D. In a scripted pipeline, you can change properties and options with something like this:

```groovy
// Scripted pipeline: run at 02:00 on Monday, Tuesday and Wednesday
properties([
    pipelineTriggers([cron('0 2 * * 1-3')])
])
```

Inside this properties block, you can add as many "settings" and "parameters" as you want. They are separated by commas and, for better readability, written one per line. For example, you can add params or options like the log rotator:

```groovy
properties([
    // keep only the last 10 builds
    [
        $class: 'BuildDiscarderProperty',
        strategy: [$class: 'LogRotator', numToKeepStr: '10']
    ],
    // trigger every 30 minutes (at a hashed offset)
    pipelineTriggers([cron('H/30 * * * *')])
])
```
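To double-check when the triggers above will actually fire, the following Python sketch expands the five fields of a cron spec into the values each field matches. It is an illustration, not Jenkins code: it only supports `*`, single numbers, `A-B` ranges, comma lists and `/step`. The Jenkins-specific `H` token is replaced by a hypothetical caller-supplied `h_offset`, whereas Jenkins itself derives that value from a hash of the job name. The function names `expand` and `expand_field` are made up for this example.

```python
"""Expand the fields of a Jenkins-style cron spec (illustrative sketch).

Assumptions: five fields (minute hour day-of-month month day-of-week),
syntax limited to *, N, A-B, comma lists and /step. The token H is
resolved with a caller-supplied offset; real Jenkins hashes the job name.
"""

# Allowed (low, high) range per field; day-of-week 0 and 7 are both Sunday.
FIELD_RANGES = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 7)]


def expand_field(field: str, lo: int, hi: int, h_offset: int = 0) -> list[int]:
    """Return the sorted values matched by a single cron field."""
    values: set[int] = set()
    for part in field.split(","):
        base, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if base == "*":
            start, end = lo, hi
        elif base == "H":
            if step_text:
                # H/step: a fixed start inside the first step window
                start, end = lo + h_offset % step, hi
            else:
                # bare H: one fixed value somewhere in the whole range
                start = end = lo + h_offset % (hi - lo + 1)
        elif "-" in base:
            a, b = base.split("-")
            start, end = int(a), int(b)
        else:
            if step_text:
                raise ValueError(f"unsupported step syntax {part!r}")
            start = end = int(base)
        if not (lo <= start <= end <= hi):
            raise ValueError(f"field {part!r} outside {lo}-{hi}")
        values.update(range(start, end + 1, step))
    return sorted(values)


def expand(spec: str, h_offset: int = 0) -> list[list[int]]:
    """Expand all five fields of a cron spec."""
    fields = spec.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour dom month dow")
    return [expand_field(f, lo, hi, h_offset)
            for f, (lo, hi) in zip(fields, FIELD_RANGES)]
```

For example, `expand("0 2 * * 1-3")` returns `[0]` for the minute, `[2]` for the hour and `[1, 2, 3]` for the day of week, i.e. 02:00 on Monday, Tuesday and Wednesday. With `h_offset=7`, `expand("H/30 * * * *", h_offset=7)[0]` gives `[7, 37]`, which shows why `H/30` runs twice an hour at a fixed, job-specific minute instead of at :00 and :30 for every job.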

Decrypt easySCP DB password

You may know the moment: someone comes to you, or you have the problem yourself, that you've lost the "root" password of your MySQL server (which of course never happens ;)), or you are migrating from another version of easySCP. Either way, if you no longer have the password but still need it, here is a small but very effective trick to decrypt easySCP's encrypted password again. All you need is your "Key" and your "IV", which are stored in "config_DB.php", plus the base64-encoded encrypted password you want to recover. These three values then have to be inserted into the following code snippet. I've marked the spots for you.

```php
<?php
// Decrypts the easySCP database password (Blowfish in CBC mode via mcrypt).
// Requires the mcrypt extension, which was removed from PHP core in 7.2.
function decrypt_db_password($db_pass)
{
    if ($db_pass == '') {
        return '';
    }

    if (extension_loaded('mcrypt')) {
        $text = @base64_decode($db_pass . "\n");
        $td = @mcrypt_module_open(MCRYPT_BLOWFISH, '', MCRYPT_MODE_CBC, '');
        $key = 'KEY'; // <-- insert your Key from config_DB.php
        $iv = 'IV';   // <-- insert your IV from config_DB.php
        // Initialize encryption
        @mcrypt_generic_init($td, $key, $iv);
        // Decrypt encrypted string
        $decrypted = @mdecrypt_generic($td, $text);
        @mcrypt_module_close($td);
        // Strip the padding and return the plain-text password
        return trim($decrypted);
    } else {
        // EasySCP_Exception only exists inside easySCP; use the built-in Exception in a standalone file
        throw new Exception("Error: PHP extension 'mcrypt' not loaded!");
    }
}

echo decrypt_db_password('base64 PW'); // <-- insert the base64-encoded password
```

For the duration of the lookup, this file has to be placed in the webroot (reachable via the browser). Open it in the browser and you have the password in plain text in front of you. Delete the file afterwards, because otherwise others can read the password through it as well. What happens here is actually quite simple: the function uses the same decryption routines that easySCP itself uses, namely base64_decode and parts of mcrypt, a PHP encryption extension.